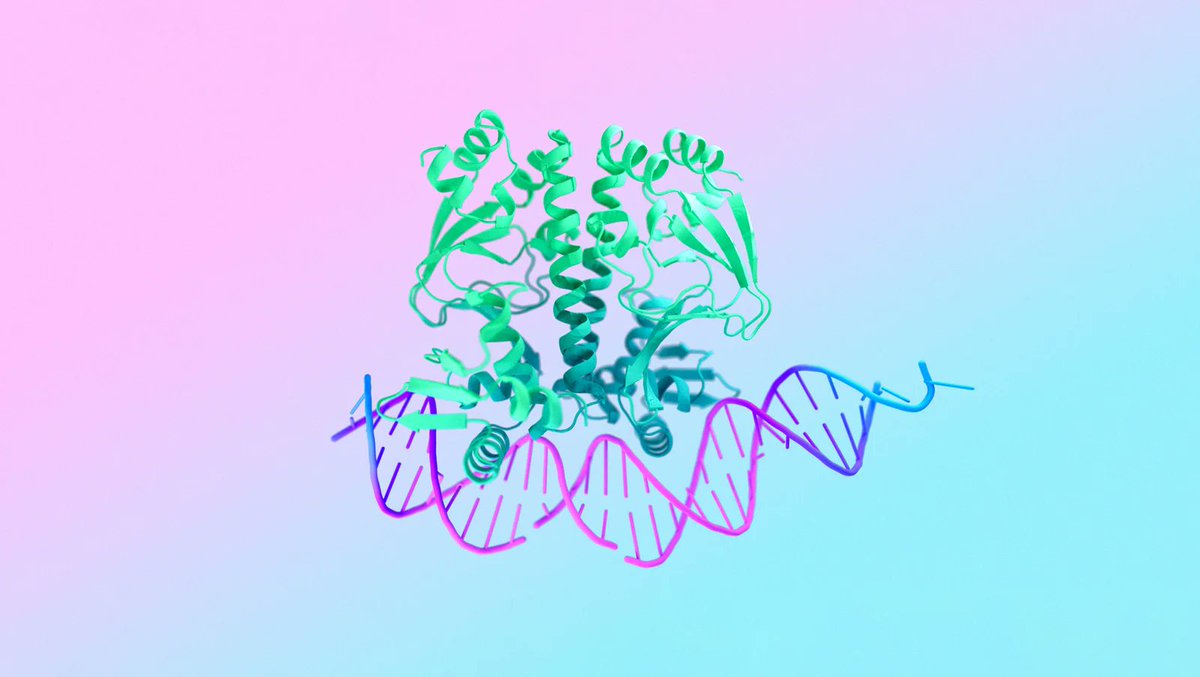

Google DeepMind and Isomorphic Labs have announced AlphaFold 3, an AI model that predicts the structures and interactions of proteins, DNA, and RNA. The release has the potential to change drug discovery and improve understanding of complex biological systems.

- Google DeepMind and Isomorphic Labs collaborate on AlphaFold 3 AI model for predicting molecular structures

- Model accurately predicts structures and interactions of proteins, DNA, and RNA

- Potential to revolutionize drug discovery and advance understanding of complex biological systems

An LMSYS report finds that duplicate prompts and highly active users do not significantly affect Llama 3’s win rate, which points to robust performance. Llama 3-70b also ranks on par with top models in Chatbot Arena, as highlighted in the analysis by Together AI. Separately, Maziyar PANAHI has updated the GGUF models for Llama-3-70B for improved quality.

- LMsys report confirms Llama 3’s strong performance despite duplicate prompts and active users

- Llama 3-70b model matches top-ranked models on Chatbot Arena, as per Together AI’s analysis

- Maziyar PANAHI enhances GGUF models for Llama-3-70B for improved quality

Top voices like Weights & Biases, PyTorch, Together AI, Supabase, and more are coming together at the GenAI x Open Source meetup in SF on Monday 5/13. Limited spots available, RSVP now to join the conversation with industry experts like @ClementDelangue and @jamiedg.

- Top voices in AI like Weights & Biases, PyTorch, and Together AI are hosting a meetup in SF

- Join industry experts like @ClementDelangue and @jamiedg for rapid fire intros and discussions

- Limited spots available, RSVP now to secure your spot at the event

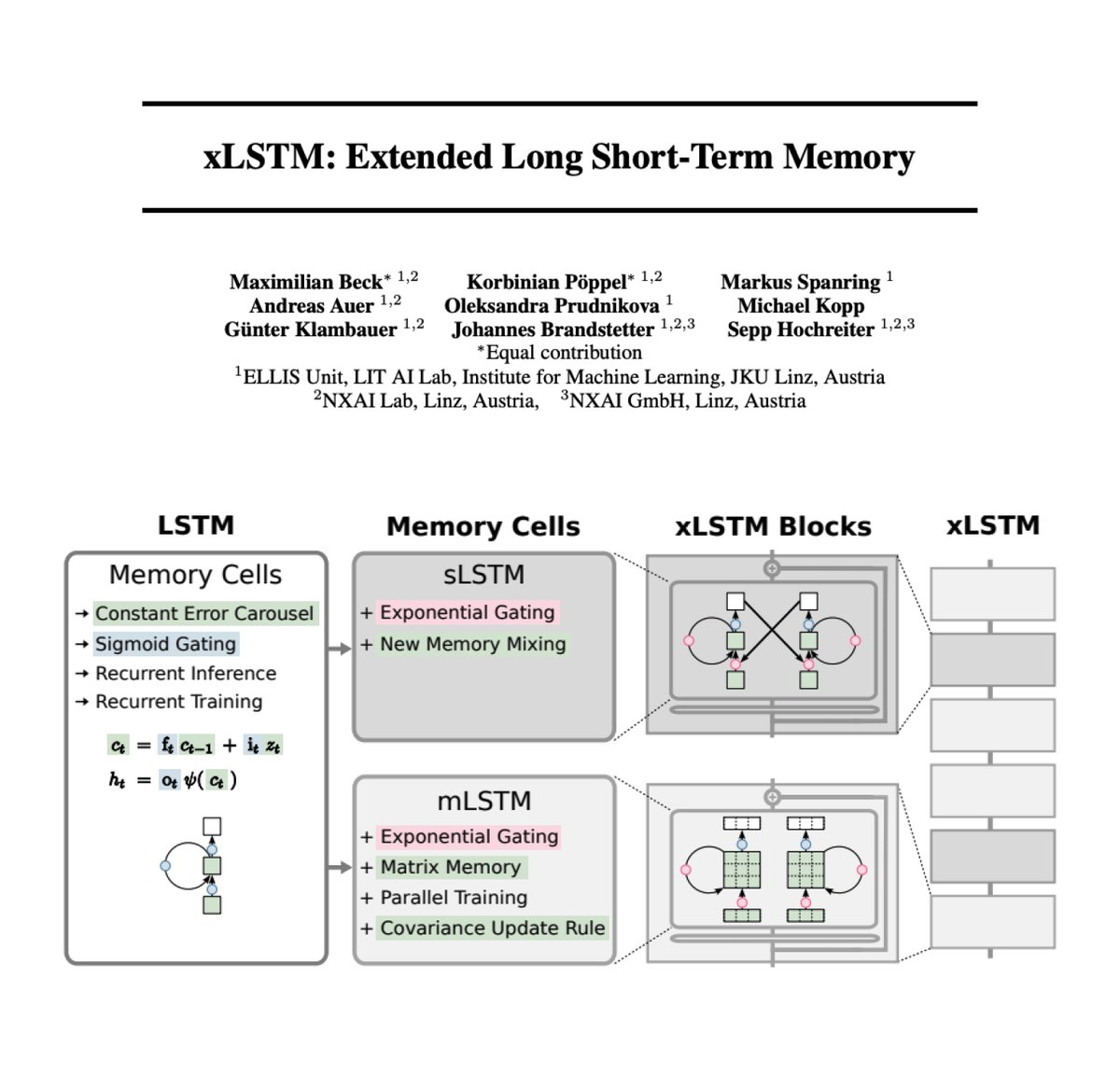

Top voices in AI research, including Aran Komatsuzaki and Johannes Brandstetter, are buzzing about xLSTM: Extended Long Short-Term Memory. This groundbreaking technique scales LSTM to billions of parameters, improves memory capacities, and introduces exponential gating. The latest techniques from modern LLMs are leveraged to mitigate limitations of LSTMs.

- xLSTM enhances LSTM with exponential gating and scalable memory capacities.

- Key technique involves modifying LSTM memory structure for improved performance.

- Comparison with state-of-the-art LLMs shows xLSTM’s potential in language modeling.

- While currently slower than FlashAttention and Mamba, optimizations could make xLSTM as fast as transformers.

- Published paper by Beck et al. provides in-depth insights: https://arxiv.org/abs/2405.04517
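
For readers wondering what "exponential gating" looks like in practice, here is a minimal scalar sketch, assuming the stabilized update described for the sLSTM variant in the Beck et al. paper. The function name `slstm_step` and the choice to pass in already-activated `z` and `o` values are assumptions made for illustration; this is not the authors' code, and the real model is vectorized and learns the gate pre-activations.

```python
import math

def slstm_step(c_prev, n_prev, m_prev, i_pre, f_pre, z, o):
    """One scalar step of an exponentially gated memory cell.

    i_pre and f_pre are gate pre-activations; the gates are exp(i_pre)
    and exp(f_pre). The stabilizer state m keeps the exponentials in a
    safe range by working in log space (assumed from the paper).
    """
    # New stabilizer: the larger of the decayed old one and the new input gate.
    m = max(f_pre + m_prev, i_pre)
    # Stabilized gates; both exponents are <= 0, so both gates are at most 1.
    i_gate = math.exp(i_pre - m)
    f_gate = math.exp(f_pre + m_prev - m)
    # Cell state and normalizer share the same gates.
    c = f_gate * c_prev + i_gate * z
    n = f_gate * n_prev + i_gate
    # Hidden state is the normalized cell state, scaled by the output gate.
    h = o * (c / n)
    return c, n, m, h

# Usage: a very large input-gate pre-activation would overflow a naive
# exp(), but the stabilized step handles it.
c, n, m, h = slstm_step(0.0, 0.0, 0.0, i_pre=1000.0, f_pre=0.0, z=2.0, o=1.0)
```

Dividing by the normalizer `n` is what keeps the output bounded even though the gates are exponentials: `h` is a weighted average of past `z` values, scaled by `o`.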

Discover the latest advancements in LLM inference acceleration with Intel’s P-Cores, Mistral.rs serving for local LLMs, Microsoft BitNet 1.58Bit for Apple Silicon, and llamafile for AVX2 processing. PyTorch introduces Triton Kernels for FP8 inference efficiency.

- Intel’s P-Cores optimize llama.cpp programs for enhanced LLM inference experience

- Mistral.rs offers fast serving for local LLMs with features like Flash attention v2 and prefix caching

- Microsoft BitNet 1.58Bit implemented on Apple Silicon for efficient edge device usage

- llamafile accelerates K quants processing on AVX2, thanks to new kernels from Iwan Kawrakow

- PyTorch introduces TK-GEMM Triton kernel for accelerated FP8 GEMM inference
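
The "1.58Bit" in the BitNet item refers to ternary weights: each weight takes one of three values (-1, 0, +1), and log2(3) is roughly 1.58 bits. Below is a minimal sketch of that idea, assuming the absmean scaling described in the BitNet b1.58 paper. The function name `ternary_quantize` is hypothetical, and this is neither Microsoft's implementation nor the Apple Silicon port mentioned above.

```python
import math

def ternary_quantize(weights, eps=1e-8):
    """Quantize a non-empty list of floats to {-1, 0, +1} with one shared scale.

    Assumed scheme: divide by the mean absolute weight, round to the
    nearest integer, then clip to the range [-1, 1].
    """
    scale = sum(abs(w) for w in weights) / len(weights)
    quantized = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return quantized, scale

# Information content of one three-valued weight, in bits.
bits_per_weight = math.log2(3)

# Usage: small weights collapse to 0, large ones saturate at +/-1.
q, scale = ternary_quantize([0.9, -1.1, 0.05, 2.0])
```

Multiplying the ternary values by `scale` gives back an approximation of the original weights. Because a matrix multiply against -1, 0, and +1 needs only additions and subtractions, the format is appealing for edge devices.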

Industry experts like Bindu Reddy and Santiago discuss the growing adoption of large language models (LLMs) in real businesses, with a focus on building AI pipelines and trustworthy virtual assistants. Pete Hunt and Yuhan Luo highlight the practical applications of LLMs in production software development.

- Bindu Reddy and Santiago emphasize the importance of LLM adoption in real businesses for AI pipelines and virtual assistants.

- Pete Hunt and Yuhan Luo showcase how businesses are building production software on LLMs.

- MonicaSLam discusses using LLMs for specific tasks and the composition of narrow capabilities.

Top voices in the AI industry warn of the dangers of centralized power in AI, advocating for democratization and decentralization. Concerns were also raised about the potential national security threats posed by adversarial nations funding AI opposition.

- AI experts warn of dangers of centralized power in AI industry

- Calls for democratization and decentralization of AI to prevent dystopia

- Concerns raised about national security threats from adversarial nations funding AI opposition

Prominent figures in the AI community like Bindu Reddy and Andrew Ng are clashing over the future of open source in AI development. While some advocate for open source innovation, others like OAI are proposing extreme security measures to lock down model weights.

- The AI community is divided over the future of open source in AI development

- Prominent figures like Bindu Reddy and Andrew Ng clash over the issue

- OAI proposes extreme security measures to lock down model weights, sparking debate

- Open source advocates argue for innovation and transparency in AI development

Top voices in AI like Liv Boeree, AIPowers, and Cohere For AI are participating in discussions on the impact of AI on content generation and cultural inclusion at various events. From AMA sessions to workshops, experts are sharing insights and perspectives on the role of AI in shaping diverse content and education environments.

- Top voices like Liv Boeree and AIPowers to participate in discussions on AI impact at events like ICLR Conference

- Topics include cultural inclusion in generative AI and dehumanization and bias in AI technology

- Experts like Sarahookr and alexhanna to lead discussions on important AI-related issues

- Events hosted by organizations like RoundtableSpace, IAI_TV, and AiCultures

Fei-Fei Li highlights the limited GPU access of Stanford’s natural language processing lab, sparking debate on academia’s struggle to keep up with industry support in AI research. Top voices like Rafael Rafailov and clem call for more support in academia. XY Han and Lucas Beyer provide context on Stanford’s GPU resources.

- Fei-Fei Li points out Stanford lab has only 64 GPUs

- Debate on academia’s struggle to match industry support in AI research

- Calls for more support for academia in AI

- Stanford’s shared GPU resources highlighted by XY Han and Lucas Beyer

Get insights on cutting-edge research in AI from top voices such as Santiago, Sayak Paul, Ken Liu, Prince Canuma, and Tom Hosking at ICLR. From production application testing to data contamination work and continued pre-training experiments, the AI community is buzzing with innovation and discoveries.

- Santiago shares learnings from setting up a recommendation model in a production application.

- Sayak Paul highlights the impact of text guidance on diffusion models and a must-read paper from ICLR’24.

- Ken Liu announces his presence at ICLR to discuss data contamination work and his upcoming exploratory work on fairness of LoRA.

- Prince Canuma teases a new series on continued pre-training with a focus on a 1:1 ratio and upcycling.

- Tom Hosking invites attendees to learn about using human feedback for evaluating/training LLMs and shares findings on the under-representation of factuality in LLM output.

Catch up on the latest episodes from top voices in the industry, covering topics like RAG, managing MLE teams, and male contraception. Don’t miss out on these insightful discussions!

- Podcasts featuring discussions on RAG, managing MLE teams, and male contraception

- @naomijowhite and Sandra Kublik collaborating on Episode 6

- TechCrunch’s Found podcast discussing male contraceptive with @kfrats from @contraline

Top voices including Weaviate • vector database, Tony Kipkemboi, Omar Khattab, Clint J., Chris, Erika Cardenas, and others shared highlights from the recent DSPy meetup. Topics included updates on Cohere’s RAG, generative feedback loops, and observability & ops. Recording now available!

- Top voices like Weaviate • vector database, Tony Kipkemboi, Omar Khattab, Clint J., Chris, Erika Cardenas, and more discussed latest developments in RAG and more at DSPy meetup

- Key topics included updates on Cohere’s RAG, generative feedback loops, and observability & ops

- Recording of the meetup now available for viewing

Stay updated with the latest happenings at #ICLR2024 conference in Vienna! Check out the schedule for the ME-FoMo workshop featuring top speakers and don’t miss the last poster session of the main conference. Exciting presentations by Cohere For AI and more!

- ME-FoMo workshop at #ICLR2024 featuring top speakers like @srush_nlp and @HannaHajishirzi

- Last poster session of the main conference happening now

- Check out algorithmic optimization work presented by @alex_shypula at Poster #255

- Exciting presentations by Cohere For AI with authors like @johnamqdang and @max_nlp

- Spotlight posters by Jindong Wang at poster 110 and 178

Exciting announcements from top voices in the AI industry including Bindu Reddy, Sasha Luccioni, and MilaQuebec. From democratizing AI to launching new initiatives and strategic partnerships, the industry is abuzz with innovation and collaboration.

- Bindu Reddy leads the charge in democratizing AI and unleashing the power of open-source in the industry.

- Sasha Luccioni, PhD introduces the Energy Star AI project to compare efficiency of AI models on different tasks.

- MilaQuebec partners with Linearis to support AI and drug discovery efforts in a $574M funding announcement.

Top voices such as DeepLearning.AI and Google AI are hosting workshops and conferences on cutting-edge AI technologies. From prompt engineering for vision models to discussing harmful tech with machine learning scientists, there’s a lot to look forward to. Don’t miss out on the latest innovations in deep learning at these events.

- DeepLearning.AI and Google AI are hosting workshops on prompt engineering and learning representations

- Discuss harmful tech with machine learning scientists at the Labor Tech Research Network Speaker Series event

- Join the Next Frontiers of Machine Learning Conference for insights from 20+ speakers and 3,500+ participants

- Stay updated with the latest in deep learning and AI by attending these free events

Microsoft has introduced LoftQ, a technique that enhances LLM fine-tuning using adaptive initialization. Multiplatform.AI covers LoftQ and its potential impact on the industry: https://multiplatform.ai/microsoft-introduces-loftq-revolutionizing-llm-fine-tuning/

- Microsoft introduces LoftQ, a cutting-edge technology for LLM fine-tuning

- Adaptive initialization for improved performance in AI algorithms

- Revolutionizing machine learning with LoftQ’s innovative approach

- Visit the link for more details on LoftQ and its impact on the industry

Discover the latest developments in DSPy, a powerful open source programming and evaluation system for LLMs. Learn about Cohere’s advancements in RAG, new optimizers for LPs, and the analogies between DSPy and PyTorch. Dive into a new category of ML models with DSPy and enhance your LLM development skills.

- DSPy is a strong open source programming and eval system for LLMs

- Cohere’s latest developments in RAG discussed in DSPy meetup

- Analogies between DSPy and PyTorch for thinking about LLMs

- New category of ML models introduced with DSPy

- Enhance LLM development skills with DSPy

Experts like Armen Aghajanyan and Lucas Beyer raise concerns about the xLSTM paper’s experimental methodology and about Michael Kopp’s absence from its affiliations. (((ل()(ل() ‘yoav))) admits that nostalgia is driving interest in the paper despite a usual verification rule.

- Armen Aghajanyan points out potential issues with xLSTM paper’s experiments

- Lucas Beyer questions Michael Kopp’s absence from xLSTM paper’s affiliations

- (((ل()(ل() ‘yoav))) admits going against usual verification rule for xLSTM paper

Databricks introduces advanced GenAI applications with RAG framework, automating prompting and built-in reasoning. OpenAI’s update on max_tokens will impact RAG output. YouTube tutorials on DSPy by Mervin Praison offer practical applications and evaluation techniques.

- Databricks unveils production-quality RAG applications for advanced AI solutions

- OpenAI’s update on max_tokens will affect RAG generated output in the future

- Mervin Praison’s DSPy tutorials on YouTube cover advanced AI RAG framework with auto reasoning and prompting

Watch as students enjoy learning algebra with the AI tutor, Archer, cracking equations and solving multi-step problems with ease. Discover how Archer guides students through challenging topics with patience and understanding. Join the #MathRocks and #EdTech revolution with ArcherAI!

- Students find joy in learning algebra with ArcherAI tutor

- AI tutor helps students solve multi-step math problems effortlessly

- Archer guides students through challenging topics with patience and understanding

- Join the #MathRocks and #EdTech revolution with ArcherAI

Explore the groundbreaking research on Kolmogorov-Arnold Networks (KAN) presented by ZimingLiu11. Learn how KANs outperform MLPs in fitting special functions and tackling catastrophic forgetting. Join the discussion on Zoom with insights from experts like Valeriy M., Hannes Stärk, and Rohan Paul.

- ZimingLiu11 to present ‘KAN: Kolmogorov-Arnold Networks’ on Monday, showcasing their potential

- KANs show lower train/test loss than MLPs in fitting special functions

- KANs offer a solution to catastrophic forgetting in machine learning

- Join the Zoom discussion with top voices like Valeriy M., Hannes Stärk, and Rohan Paul

AI4Prompts introduces a range of new prompts from AIArtists like MCLUCAS and Anouk Inspiration, including themes like Simple Storybook for Kids and Minimal Artwork Collage. A platform for selling and showcasing AI-generated prompts.

- AI4Prompts showcases new prompts from top AIArtists like MCLUCAS and Anouk Inspiration

- Themes include Simple Storybook for Kids, Minimal Artwork Collage, Basic Scrapbooking Parchment, and more

- A platform for AIArtists to sell and share their AI-generated prompts

- Visit AI4Prompts website to explore and purchase these creative prompts

Ian Goodfellow and Tengyu Ma discuss the evolution of adversarial examples in neural networks and the impact of non-differentiability on training. Machine Learning delves into the effects of weight norm on delayed generalization.

Top voices in AI Papers and Machine Learning are leading the way in graph classification with innovative approaches like Structural Compression, Heterophily incorporation, Feature Importance Awareness, Multi-scale Oversampling, and Hypergraph-enhanced Dual Semi-supervised methods.

- AI Papers and Machine Learning experts are revolutionizing graph classification techniques

- Innovative approaches like Structural Compression and Heterophily incorporation are being utilized

- Methods like Feature Importance Awareness and Multi-scale Oversampling are enhancing graph classification accuracy

- Top voices in the field are exploring Hypergraph-enhanced Dual Semi-supervised methods for improved results

LangChain praises Cohere Toolkit for advanced RAG app building, Akshay highlights Cleanlab’s trustworthiness scores for RAG responses. DeepLearning.AI offers free course on building Agentic RAG with Llama_Index. Akshay and Towards Data Science emphasize RAG evaluation importance.

- Cohere Toolkit by @cohere-ai lauded for beautiful UI and advanced RAG features

- Cleanlab’s Trustworthy Language Model assigns trustworthiness scores to RAG responses

- DeepLearning.AI offers free course on building advanced RAG systems with Llama_Index

- Akshay working on RAG evaluation using LightningAI Studio and ragas library

- Towards Data Science suggests using ragas library for synthetic evaluation data in RAG system development

Top voices in AI industry like Amir Efrati and Matt Wolfe are buzzing about OpenAI’s new model with advanced audio capabilities, surpassing GPT-4 Turbo in some queries. The company is set to reveal its AI Voice Assistant, competing with Google and Apple, in an upcoming event.

- OpenAI develops new AI model with audio capabilities, outperforming GPT-4 Turbo.

- Upcoming event to showcase OpenAI’s AI Voice Assistant, challenging Google and Apple.

- Industry experts like Amir Efrati and Matt Wolfe excited for OpenAI’s advancements in AI technology.

DeepSeek AI has released the DeepSeek-V2 model with 236B parameters, a 128k context window, and 21B active parameters. The paper and models are available on Hugging Face, showcasing top-tier performance in various benchmarks. Multiplatform.AI praises the adaptability and efficiency of DeepSeek-V2. Top voices like Philipp Schmid and Rohan Paul commend the model’s advancements in AI technology.

- DeepSeek AI releases DeepSeek-V2 model with impressive parameters and context window

- Paper and models available on Hugging Face, showcasing top-tier performance in benchmarks

- Multiplatform.AI highlights the adaptability and efficiency of DeepSeek-V2

- Praise from industry experts Philipp Schmid and Rohan Paul for advancements in AI technology

Top voices in machine learning, including François Chollet and Omar Khattab, discuss the limitations of deep learning models as curves fitted to data distribution and the role of synthetic data generation in improving training data quality. They highlight the importance of understanding the training distribution for model performance and the potential benefits of synthetic data as a denoising process.

- Top ML experts discuss the limitations of deep learning models and the need for intelligence in solving tasks outside of the training distribution

- Synthetic data generation is valuable for improving training data quality by acting as a denoising process

- Understanding the role of data distribution and synthetic data in ML models is essential for improving performance and addressing challenges in training data quality

Join industry leaders like Weights & Biases, DeepLearning.AI, and Bindu Reddy in exploring the latest advancements in Language Model fine-tuning and application. From practical webinars to hands-on workshops, unlock the potential of LLMs in boosting business and enhancing data science skills.

- Exciting Applied AI course by Hamel Husain and Dan Becker for Data Scientists and Software Engineers.

- Short course by LangChainAI CEO on calling functions in LLMs for structured data handling.

- Webinar with MSFT Reactor on leveraging LLMs for business success, featuring practical tips.

- Fine-tune open source LLMs for specific domains easily with Bindu Reddy’s tool.

- Join ACM TechTalk with rasbt on understanding the LLM Development Cycle, moderated by Marlene Zw from Microsoft.

Google Duplex, the first human-like voice assistant, was demoed almost six years ago, and everyone loves to anthropomorphize AI. Colin McNamara shares how to enhance your AI chatbot with speech recognition and voice output. Data Science Dojo explores the complex world of AI and how to build your own chatbot project.

- Anthropomorphizing AI with Google Duplex

- Enhancing AI chatbots with speech recognition and voice output

- Exploring the world of AI and building chatbot projects

- Featured top voices: Colin McNamara, Data Science Dojo
